| 작성자 | 김명원 |

| 일 시 | 2024. 5. 23 (목) 18:00 ~ 21:00 |

| 장 소 | 복지관 b128-1호 |

| 참가자 명단 | 임혜진, 이재영, 성창민, 김명원, 장원준 |

| 사 진 |  |

사용할 라이브러리 호출

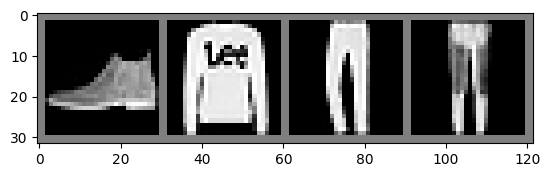

데이터셋 로드 및 전처리

신경망 정의

Loss, optimizer 정의

모델 학습

[1, 200] loss: 2.298 [1, 400] loss: 2.286 [1, 600] loss: 2.209 [1, 800] loss: 1.594 [1, 1000] loss: 1.080 [1, 1200] loss: 1.052 [1, 1400] loss: 0.866 [1, 1600] loss: 0.820 [1, 1800] loss: 0.724 [1, 2000] loss: 0.766 [1, 2200] loss: 0.767 [1, 2400] loss: 0.757 [1, 2600] loss: 0.722 [1, 2800] loss: 0.675 [1, 3000] loss: 0.702 [1, 3200] loss: 0.634 [1, 3400] loss: 0.669 [1, 3600] loss: 0.656 [1, 3800] loss: 0.653 [1, 4000] loss: 0.654 [1, 4200] loss: 0.620 [1, 4400] loss: 0.650 [1, 4600] loss: 0.550 [1, 4800] loss: 0.630 [1, 5000] loss: 0.620 [1, 5200] loss: 0.639 [1, 5400] loss: 0.578 [1, 5600] loss: 0.534 [1, 5800] loss: 0.579 [1, 6000] loss: 0.617 [1, 6200] loss: 0.569 [1, 6400] loss: 0.525 [1, 6600] loss: 0.547 [1, 6800] loss: 0.545 [1, 7000] loss: 0.531 [1, 7200] loss: 0.541 [1, 7400] loss: 0.551 [1, 7600] loss: 0.480 [1, 7800] loss: 0.466 [1, 8000] loss: 0.518 [1, 8200] loss: 0.555 [1, 8400] loss: 0.481 [1, 8600] loss: 0.515 [1, 8800] loss: 0.445 [1, 9000] loss: 0.530 [1, 9200] loss: 0.507 [1, 9400] loss: 0.451 [1, 9600] loss: 0.439 [1, 9800] loss: 0.468 [1, 10000] loss: 0.508 [1, 10200] loss: 0.486 [1, 10400] loss: 0.452 [1, 10600] loss: 0.485 [1, 10800] loss: 0.449 [1, 11000] loss: 0.463 [1, 11200] loss: 0.450 [1, 11400] loss: 0.509 [1, 11600] loss: 0.542 [1, 11800] loss: 0.470 [1, 12000] loss: 0.502 [1, 12200] loss: 0.493 [1, 12400] loss: 0.469 [1, 12600] loss: 0.453 [1, 12800] loss: 0.500 [1, 13000] loss: 0.404 [1, 13200] loss: 0.435 [1, 13400] loss: 0.441 [1, 13600] loss: 0.468 [1, 13800] loss: 0.453 [1, 14000] loss: 0.477 [1, 14200] loss: 0.403 [1, 14400] loss: 0.443 [1, 14600] loss: 0.386 [1, 14800] loss: 0.453 [1, 15000] loss: 0.428 [2, 200] loss: 0.381 [2, 400] loss: 0.448 [2, 600] loss: 0.417 [2, 800] loss: 0.377 [2, 1000] loss: 0.402 [2, 1200] loss: 0.398 [2, 1400] loss: 0.364 [2, 1600] loss: 0.380 [2, 1800] loss: 0.383 [2, 2000] loss: 0.365 [2, 2200] loss: 0.411 [2, 2400] loss: 0.413 [2, 2600] loss: 0.386 [2, 2800] loss: 0.395 [2, 3000] loss: 0.402 [2, 3200] loss: 0.396 [2, 3400] loss: 0.387 [2, 3600] loss: 0.389 [2, 3800] loss: 0.407 [2, 4000] loss: 0.369 [2, 4200] loss: 0.360 [2, 4400] loss: 0.405 [2, 4600] loss: 0.339 [2, 4800] loss: 0.356 [2, 5000] loss: 0.365 [2, 5200] loss: 0.335 [2, 5400] loss: 0.331 [2, 5600] loss: 0.401 [2, 5800] loss: 0.398 [2, 6000] loss: 0.396 [2, 6200] loss: 0.437 [2, 6400] loss: 0.414 [2, 6600] loss: 0.391 [2, 6800] loss: 0.378 [2, 7000] loss: 0.354 [2, 7200] loss: 0.378 [2, 7400] loss: 0.389 [2, 7600] loss: 0.361 [2, 7800] loss: 0.421 [2, 8000] loss: 0.341 [2, 8200] loss: 0.355 [2, 8400] loss: 0.394 [2, 8600] loss: 0.356 [2, 8800] loss: 0.401 [2, 9000] loss: 0.371 [2, 9200] loss: 0.360 [2, 9400] loss: 0.373 [2, 9600] loss: 0.355 [2, 9800] loss: 0.384 [2, 10000] loss: 0.347 [2, 10200] loss: 0.366 [2, 10400] loss: 0.415 [2, 10600] loss: 0.415 [2, 10800] loss: 0.410 [2, 11000] loss: 0.325 [2, 11200] loss: 0.344 [2, 11400] loss: 0.341 [2, 11600] loss: 0.358 [2, 11800] loss: 0.395 [2, 12000] loss: 0.351 [2, 12200] loss: 0.360 [2, 12400] loss: 0.316 [2, 12600] loss: 0.365 [2, 12800] loss: 0.324 [2, 13000] loss: 0.337 [2, 13200] loss: 0.327 [2, 13400] loss: 0.311 [2, 13600] loss: 0.343 [2, 13800] loss: 0.337 [2, 14000] loss: 0.354 [2, 14200] loss: 0.359 [2, 14400] loss: 0.323 [2, 14600] loss: 0.331 [2, 14800] loss: 0.352 [2, 15000] loss: 0.363

모델 저장 및 평가

드디어 마지막 모각코이다.

내가 공부하고 실습한 내용을 정리하면서

많은 공부가 됐고 앞으로 다시 필요한 내용을 찾을 때 좋을 것 같다.